Why Your App is Failing and How QA Testing Can Fix It

The question of “why apps fail” is an immeasurably complex one, and there are probably as many answers out there as there are failed apps.

However, if you look closely enough, stories of failing apps often hit the same beats. While no “failure” can honestly be attributed to a single issue or mistake, many teams keep stumbling over the same hurdles they have faced for years. Those hurdles are much easier to spot and overcome with a proper QA framework.

In this article, we’ll consider some of the most common reasons behind failed apps. We’ll explain how to spot these issues, how to address them, and how strong software QA can help put your project back on track.

First, Recognize the Symptoms (What “Failure” Looks Like)

Failure means a different thing to different projects. Sometimes, it’s defined as not reaching a set of predetermined goals. Sometimes it’s as simple as “missing the mark” with the product itself.

Either way, you need to be able to recognize the symptoms of failure before you can address the underlying issues. Here is a list of common indicators that your app has problems with Quality Assurance.

- Crash rate spikes / low crash-free sessions – If the app seems to simply not work for a high number of users, it likely has fundamental issues that must not be ignored. An app that doesn’t crash is the bare minimum of quality, after all.

- Slow load times, jank, timeouts – Major performance issues such as these are often linked to inefficiencies and lackluster testing. While not always immediately visible, they can severely damage the app’s UX and core functionality.

- Broken user flows (signup, login, checkout) – If the path the user is intended to follow to achieve their goal is not smooth or logical, most users will simply stop bothering.

- Payment failures & API errors – Issues encountered during API communication can cause critical features to fail, resulting in lost users and decreased revenue.

- Negative reviews/ratings and rising uninstall rate – Every app is ultimately created to be used by actual people. If those same users are unhappy with the product, it’s obvious that there are major issues with it. Not addressing such concerns can quickly spiral out of control, which makes user feedback an invaluable resource.

- Feature regressions after each release – Without proper QA, previously working features often break after new code is added. This can leave your project stuck in a rut of technical debt and create a “whack-a-mole” scenario with your team trying to plug holes that appear faster than they can be fixed.

The list of symptoms we just described can be useful for diagnosing your bottlenecks, but it’s by no means exhaustive. Just remember that every project has its own failure states, which have to be outlined on a case-by-case basis.
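To make the first symptom measurable, here is a minimal sketch of how a crash-free session rate could be computed. The `Session` shape and the sample data are assumptions made for illustration; in practice these numbers would come from your crash reporting or analytics tool.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One app session (hypothetical shape); `crashed` is True if it ended in a crash."""
    session_id: str
    crashed: bool


def crash_free_rate(sessions: list[Session]) -> float:
    """Return the percentage of sessions that did not end in a crash."""
    if not sessions:
        raise ValueError("no sessions to evaluate")
    healthy = sum(1 for s in sessions if not s.crashed)
    return 100.0 * healthy / len(sessions)


# Made-up sample: 1,000 sessions, every 50th one crashes (20 crashes in total).
sessions = [Session(f"s{i}", crashed=(i % 50 == 0)) for i in range(1, 1001)]
rate = crash_free_rate(sessions)
print(f"crash-free sessions: {rate:.1f}%")  # prints "crash-free sessions: 98.0%"
```

Tracking this number per release, rather than as a single lifetime figure, makes it easier to tell which version introduced a spike.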

Root Causes Most Teams Overlook

Recognizing symptoms is only the first step towards addressing app quality issues.

Some symptoms have a clear cause-and-effect relationship with certain elements of your software, which makes them easier to tackle. However, the root causes behind app failure are often not so easy to identify or address.

With that in mind, here are some common QA issues that developers often overlook:

- Unclear acceptance criteria & missing test cases – Properly defined criteria are the backbone of every effective QA test. On the flip side, vague test requirements can create confusion about what needs to be built, leading to features that don’t align with the intended functionality.

- Minimal or ad-hoc regression testing – Regression testing is not simply about protecting existing code from unintended effects of updates – it’s about future-proofing your product. As such, it has to be implemented in a structured, comprehensive approach.

- Device/OS fragmentation left untested – Apps should strive to be accessible to as many people as possible, especially if they’re built with mass-market appeal in mind. However, a wider audience means more device and OS combinations, which can lead to compatibility issues, especially in mobile apps, if they are not adequately covered during testing.

- API contract drift, flaky integrations – Contract drift simply means that the API’s specifications are different from its actual system behavior. It can be notoriously difficult to troubleshoot, leading to broken integrations and major inefficiencies.

- Performance blind spots – Some areas of an app’s functionality are not immediately apparent to developers or users, but they can cause major issues down the line. For example, an app’s inability to handle load spikes can go unnoticed for a long time before causing a complete breakdown.

- Security holes from skipped checks (SAST/DAST) – A lack of comprehensive security testing can leave your app with significant, exploitable weaknesses. Testing needs to cover both the source code before it runs (static analysis, or SAST) and the running application (dynamic analysis, or DAST).

- No release gates in CI/CD, no feature flags/canary releases – Inefficient QA pipelines often skip important parts of the whole process, such as setting up proper release gates. The effects of these omissions are often not immediately evident, but they can build up to disastrous consequences.
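Contract drift in particular is easy to illustrate. The sketch below compares a real response against a documented contract and lists the mismatches. The field names and the `EXPECTED_CONTRACT` dictionary are hypothetical, and a dedicated contract testing tool would go much further than this simple type check.

```python
# Hypothetical documented contract for a payment response: field name -> expected type.
EXPECTED_CONTRACT = {"order_id": str, "amount_cents": int, "currency": str}


def find_contract_drift(contract: dict, response: dict) -> list[str]:
    """Compare a real response against the documented contract and list mismatches."""
    problems = []
    for field, expected_type in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            actual = type(response[field]).__name__
            problems.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    # Fields the API returns but the contract never mentions are drift as well.
    for field in sorted(response.keys() - contract.keys()):
        problems.append(f"undocumented field: {field}")
    return problems


# The backend started returning the amount as a string: a typical silent drift.
actual_response = {"order_id": "A-1001", "amount_cents": "1999", "currency": "USD"}
drift = find_contract_drift(EXPECTED_CONTRACT, actual_response)
print(drift)  # prints ['amount_cents: expected int, got str']
```

Running a check like this against critical endpoints on every build turns a hard-to-trace integration bug into an immediate, readable failure.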

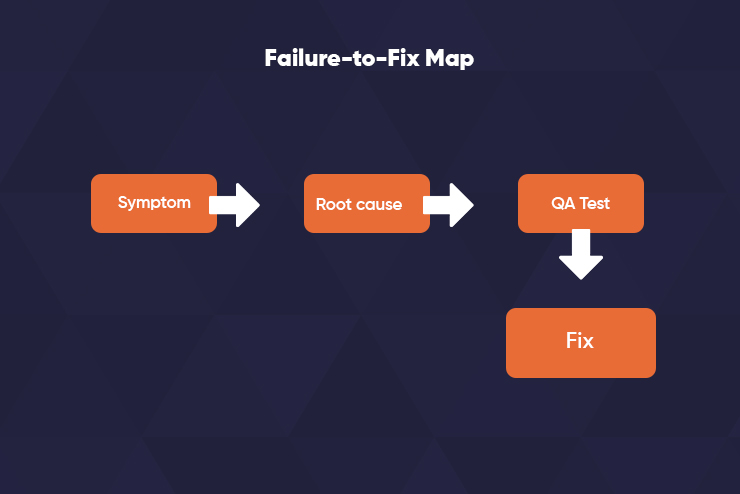

Symptoms, Causes, and QA Methods that Fix Them

Now that we’ve looked at some common symptoms and causes of app failure, it’s time to address the all-important question: how do we fix them?

The honest answer is that it depends on the project, its requirements, and its resources. However, common problems often require standard solutions, so there are still some easy recommendations we can point out for specific issues.

| Symptom | Likely Root Cause(s) | QA Tests That Expose It | Fix / Action |
|---|---|---|---|
| Frequent crashes | Uncaught exceptions, memory leaks, device-specific issues | Crash reproduction scripts, device matrix tests, instrumentation, exploratory tests on target devices | Add crash reporting, fix leaks, expand device coverage |
| Slow screens/timeouts | Inefficient queries, heavy assets, and network variability | Performance profiling, API latency tests, network throttling, load tests | Optimize queries/assets, CDN, caching, circuit breakers |
| Checkout failures | API contract mismatch, payment gateway edge cases | Contract testing (Pact), API e2e, negative test cases | Align contracts, add retries/idempotency, improve error handling |
| Buggy releases | No regression suite, no gates | Automated smoke + regression in CI, release gates | Block release on critical failures; add feature flags |
| 1★ reviews & churn | UX friction, accessibility gaps | Usability testing, accessibility audits (WCAG), analytics funnel checks | Fix UX blockers, address A11y issues, re-test with users |

The table above outlines some common app issues, the root causes behind them, and QA methods that fix them, while the image below shows a Failure-to-fix Map.
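The “add retries/idempotency” fix for checkout failures is worth a short sketch. The idea is that every retry reuses the same idempotency key, so a request that timed out after the charge went through is not charged twice. `FakeGateway` is a stand-in written for this example, not a real payment API, and backoff delays between attempts are left out for brevity.

```python
import uuid


class FakeGateway:
    """Stand-in for a payment gateway (hypothetical); loses the first response."""

    def __init__(self):
        self.charges = {}  # idempotency key -> amount actually charged
        self.calls = 0

    def charge(self, key: str, amount_cents: int) -> str:
        self.calls += 1
        if key not in self.charges:
            self.charges[key] = amount_cents  # a charge is recorded once per key
        if self.calls == 1:
            raise TimeoutError("response lost after the charge went through")
        return "ok"


def charge_with_retry(gateway, amount_cents: int, max_attempts: int = 3) -> str:
    """Retry on timeout, reusing one idempotency key so the customer pays once."""
    key = str(uuid.uuid4())
    for attempt in range(1, max_attempts + 1):
        try:
            return gateway.charge(key, amount_cents)
        except TimeoutError:
            if attempt == max_attempts:
                raise
    raise ValueError("max_attempts must be at least 1")


gateway = FakeGateway()
status = charge_with_retry(gateway, 1999)
print(status, gateway.calls, len(gateway.charges))  # prints "ok 2 1"
```

A negative test case for this flow would simulate exactly this lost response and assert that only one charge exists afterwards.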

The QA Toolkit That Turns It Around

For better or worse, software QA is not always a matter of quick, one-to-one problems and solutions. It involves methodically figuring out the root causes behind problems and then addressing them with the proper tools.

As such, it’s important to understand which tools are used in Quality Assurance and what their intended purpose is. Here are some examples of the tools, tests, and methods used to save failing apps using QA:

- Functional & Regression Testing: These types of tests ensure that the most basic features and functions of the app work as intended. They can be further broken down into:

  - Unit tests, which test individual components of the software

  - API tests, which focus on API integrations and proper communication between different components

  - UI tests, which ensure that users can properly interact with the app.

- Performance & Reliability: As the name implies, these tests ensure that the app performs well and efficiently manages resources. Many performance tests, such as Load and Stress tests, focus on examining the performance under irregular and unfavorable conditions.

- Compatibility & Device Matrix: These methods are all about ensuring stable performance and functionality regardless of the device or its hardware. The high variance of operating systems, screen sizes, and hardware capabilities makes this especially tricky for mobile-centric apps. The matter is further complicated by the growing body of legacy hardware and the increasing importance of low-end devices on the market.

- Security & Compliance: This form of QA testing checks whether the app is able to safeguard sensitive information and withstand cybersecurity threats. It employs a variety of tools, including both static and dynamic tests, secret scanning tools that expose vulnerabilities, and dependency checks.

- Accessibility & UX Validation: These tests focus on the human element of app usage, ensuring users can interact with the software smoothly and intuitively. This includes anything ranging from ensuring a proper color contrast to optimizing the user interface. Though often seen as surface-level and cosmetic, proper UX testing can vastly improve customer satisfaction and boost ratings.

- Analytics-Backed Testing: An effective QA pipeline is set up to be able to tackle new issues as they arise. For example, relying on crash logs, heatmaps, and funnels to identify bottlenecks and prioritize certain tests or actions. This generally requires setting up a proper feedback loop.

- Automated Testing: QA automation can be an effective way to increase test coverage and reliability, plugging holes that you might have otherwise missed. However, knowing when to automate QA is more difficult than it sounds. Automation is a big trend in modern software QA, but it’s not a one-stop solution to issues.
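As a small illustration of the functional and regression layer, here is a sketch of an automated unit test suite written with Python’s built-in `unittest` module. The `cart_total_cents` function is a hypothetical piece of app logic invented for this example; the point is that each test pins down behavior that a later change must not break.

```python
import unittest


def cart_total_cents(prices_cents, discount_percent=0):
    """Hypothetical function under test: sum item prices, then apply a discount."""
    subtotal = sum(prices_cents)
    return subtotal - (subtotal * discount_percent) // 100


class CartTotalRegressionTest(unittest.TestCase):
    """Each test locks in behavior that future releases must preserve."""

    def test_no_discount(self):
        self.assertEqual(cart_total_cents([1000, 250]), 1250)

    def test_discount_applied(self):
        self.assertEqual(cart_total_cents([1000, 1000], discount_percent=10), 1800)

    def test_empty_cart(self):
        self.assertEqual(cart_total_cents([]), 0)


# Run the suite programmatically, the way a CI job might.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(CartTotalRegressionTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print("all tests passed:", result.wasSuccessful())
```

Whenever a bug is fixed, adding one more test that reproduces it is a cheap way to keep the same regression from coming back.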

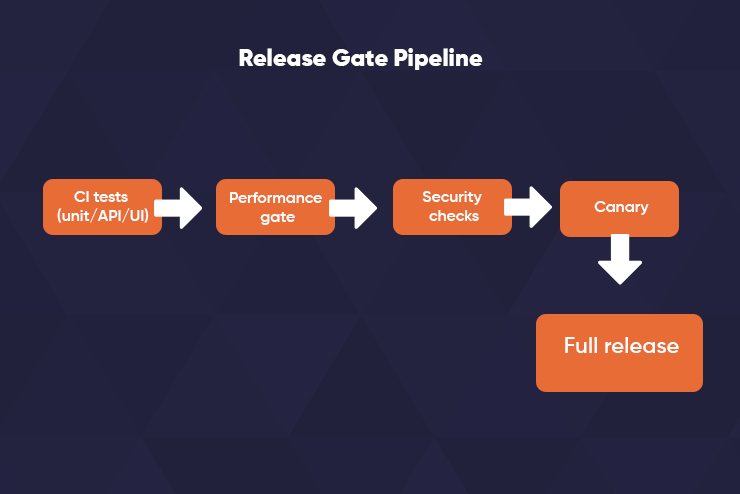

How to Build a Release Pipeline That Prevents Failure

Most QA experts agree that prevention is the best QA strategy for apps. After all, the cheapest way to solve a software issue is to not have it in the first place.

The methods and philosophies for actually preventing app failure differ, however. There are many approaches that teams can take, and their validity often depends on the specifics of the project.

Still, we can offer some advice on setting up a long-term QA operating model that focuses on preventing critical issues from spiraling out of control. Here are some common techniques that QA teams implement to prevent app failure:

- CI/CD with Release Gates – Release gates are essentially checkpoints that ensure the code is sufficiently stable and meets a set of standards before release. They define criteria that must be met at specific stages of the development pipeline. Failure to meet those criteria automatically blocks further progression, preventing issues from reaching the live version of the app. Non-critical issues often don’t block the release; instead, they trigger a warning for the QA team and suggest the next step.

- Feature Flags – Otherwise known as feature toggles or switches, this technique allows developers to instantly enable or disable specific features or elements of the app during runtime. The whole idea is to be able to control the app in a production environment without requiring code changes or deployments. This is commonly used for gradual, controlled releases of new features, often starting with a small group of users. Feature flags are a great way to prevent regression issues and minimize the risks of rolling out new app versions.

- Flaky Test Triage – A flaky test is a QA test that produces inconsistent results. Rather than consistently failing to meet acceptance criteria, flaky tests sometimes meet them and sometimes don’t, even when the code has not changed. This can be an especially tricky problem to tackle because it undermines confidence in the test itself, rather than the software. Flaky test triage is the process of addressing these issues. It often involves observing the flaky tests over repeated runs, quarantining them so they don’t block the pipeline, and using specialized tools to track down the cause.

- Definition of Done (DoD) including QA – The DoD refers to a shared, clear goal of what is considered a complete version of the app that’s ready for release. It must also include QA checks and metrics, encompassing various testing types to verify functionality, performance, and other quality attributes. Any attempts to fix a “failing” app must also outline clear steps, goals, and standards.
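The gradual rollout behind feature flags can be sketched in a few lines. This is one common approach, not the only one: each user is hashed into a stable bucket from 0 to 99, and the flag is on for users whose bucket falls below the rollout percentage. The flag name, the `FLAGS` dictionary, and the user IDs are assumptions for illustration; in a real setup the percentage would come from a runtime configuration source so it can change without a deployment.

```python
import hashlib


def rollout_bucket(flag_name: str, user_id: str) -> int:
    """Map a user to a stable bucket in the range 0..99 for a given flag."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(flag_name: str, user_id: str, rollout_percent: int) -> bool:
    """Return True if the flag is on for this user at the given rollout percentage."""
    return rollout_bucket(flag_name, user_id) < rollout_percent


# Hypothetical flag configuration: the new checkout is on for roughly 10% of users.
FLAGS = {"new_checkout": 10}

users = [f"user-{i}" for i in range(1000)]
enabled = [u for u in users if is_enabled("new_checkout", u, FLAGS["new_checkout"])]
print(f"{len(enabled)} of {len(users)} users see the new checkout")
```

Because the bucket depends only on the flag name and the user ID, a user who got the feature at 10% keeps it when the rollout grows to 50%, and setting the percentage to 0 switches the feature off for everyone.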

Sample Deployment: The 14-Day Stabilization Plan

So, you’ve decided to tackle your app quality issues using the methods we’ve outlined above. What now?

The exact process and implementation of a QA strategy will inevitably depend on the specifics of the case. However, we’ve outlined a sample plan that should give you a rough idea about what the fixing process would actually look like.

- Days 1–2: Triage top 10 crashes and top 3 broken flows from analytics.

- Days 3–5: Implement crash/smoke suites; add API contract tests for critical paths.

- Days 6–9: Performance profiling of slow screens; optimize low-hanging fruit; run load test on key endpoints.

- Days 10–12: Add release gates; enable feature flags for risky components.

- Days 13–14: Regression pass; hotfix verification; push canary → full rollout.

- Deliverable checklist: compare results to metrics, such as crash-free percentage target, p95 latency target, zero critical blockers, and regression summary.
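The deliverable checklist maps naturally onto an automated release gate. Below is a minimal sketch, assuming the metrics have already been collected into a dictionary. The target values, the metric names, and the sample latencies are all made up for this example, and the p95 uses the simple nearest-rank method.

```python
import math


def p95(values):
    """95th percentile using the nearest-rank method."""
    if not values:
        raise ValueError("no values to evaluate")
    ordered = sorted(values)
    rank = math.ceil(0.95 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def evaluate_gate(metrics, targets):
    """Return the list of failed checks; an empty list means the release may proceed."""
    failures = []
    if metrics["crash_free_percent"] < targets["min_crash_free_percent"]:
        failures.append("crash-free percentage below target")
    if p95(metrics["latencies_ms"]) > targets["max_p95_latency_ms"]:
        failures.append("p95 latency above target")
    if metrics["critical_blockers"] > 0:
        failures.append("critical blockers still open")
    return failures


# Hypothetical targets and measurements, for illustration only.
targets = {"min_crash_free_percent": 99.5, "max_p95_latency_ms": 800}
metrics = {
    "crash_free_percent": 99.7,
    "latencies_ms": [120, 180, 200, 220, 250, 300, 320, 400, 450, 1200],
    "critical_blockers": 0,
}
failures = evaluate_gate(metrics, targets)
print(failures)  # prints ['p95 latency above target']
```

In a CI pipeline, a script like this would exit with a non-zero status whenever the list is not empty, which is what actually blocks the release.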

When to Call in Reinforcements

It’s important to note that this article simplifies software QA to make it more understandable. Actually implementing these techniques and achieving measurable results with them is by no means an easy task.

With that in mind, it may be time to consider calling in some backup by outsourcing your app’s QA. There are many reasons to outsource QA, but here are some scenarios in which looking for outside help is often the best course of action:

- Urgent fixing required – Teams behind failing apps need to address issues quickly, before they get worse. Building or restructuring a pipeline in-house can take months. A specialized, experienced team of QA experts such as TechTailors can minimize the time required to identify and resolve QA bottlenecks.

- Team skill gaps – QA issues are often tied to project-specific needs that require a highly specialized set of skills. Moreover, QA audits often reveal new needs and requirements that your team may not be able to immediately meet. Outsourcing can quickly fill those gaps, ensuring the job gets done right and on time.

- Testing sprints and audits – In Agile and Scrum methodologies, QA testing is applied throughout the development process and not just as a final phase. However, this requires staff and resources that may not be immediately available, especially for small and medium-sized companies.

- Long-term projects and managed QA – Big, ongoing projects may require an entire team of QA experts working full-time to ensure quality. In this case, bringing in outside help is a matter of saving money. A managed QA agreement can often be a more affordable way to keep long-term testing projects running smoothly without compromising on quality.

Conclusion

The reasons apps fail are typically symptoms of bigger underlying issues. Feedback is often based on the most obvious user-facing problems, but tackling those same problems requires a comprehensive methodology for diagnosing and resolving QA failures.

A proper QA pipeline ensures that your project is not just putting out fires as they come up. Instead, it tackles the root causes that prevent your app from reaching its goals in terms of both quality and functionality. Moreover, it sets up a system that can reduce app crashes, resolve quality issues, improve stability, and fix bugs with speed and efficiency.

If you need help identifying the root causes of your app’s problems, book a free QA consultation with TechTailors and start getting your app back on course.